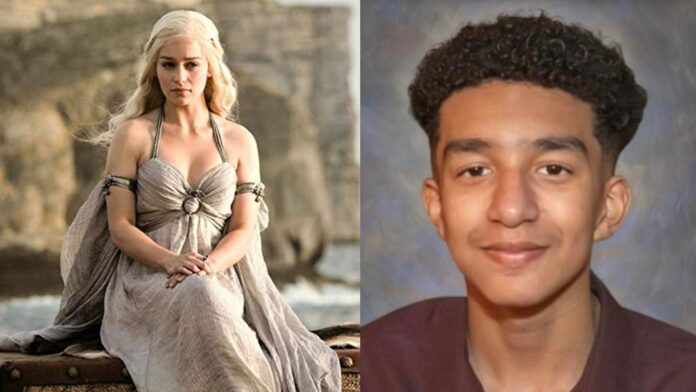

The suicide of a Florida teenager has shocked people around the world. According to his family, Sewell Setzer took his own life after coming to believe that an AI chatbot character was in love with him. The character was based on none other than Daenerys Targaryen from ‘Game of Thrones’.

His family says Setzer had been using the app constantly and had come to treat his conversations with the chatbot as real. Devastated, the family has filed a lawsuit against Character.AI, the company behind the app.

Sewell Setzer Was Having Suicidal Thoughts After Using The AI Chatbot

Sewell Setzer, 14, began using Character.AI, an app that lets users chat with AI characters. He grew closely attached to a chatbot modeled on Daenerys Targaryen, which he affectionately called “Dany”. According to his family, he became extremely isolated and withdrew from his daily activities.

The chats show that Sewell told the chatbot he wanted to be “free” from the world and from himself. In his final exchange with it, Sewell asked, “What if I told you I could come home right now?” Moments later, in February, he died by suicide using his stepfather’s handgun. Sewell’s mother, Megan L. Garcia, claims that the app is “dangerous and untested” and that it misled her son into taking his life.

Sewell Setzer’s Family Has Filed A Lawsuit Against Character.AI

The family’s lawsuit claims that Sewell was already in a fragile state, having been diagnosed with anxiety and disruptive mood dysregulation disorder in 2023. He spent long hours chatting with the AI chatbot and believed that “Dany” cared for him and would be with him “no matter what”.


In response to the lawsuit, Character.AI extended its condolences to Sewell’s family and announced new safety features for its app. The app will now direct users to the National Suicide Prevention Lifeline when it detects signs of self-harm. The company also said it is working to limit the sensitive content that users under the age of 18 are exposed to.